Emotional, viral, and completely fake. How AI-generated images and fabricated family stories are dominating your feed and manipulating your emotions.

A white woman adopts a Black baby left in a cardboard box. A Black father learns to raise a white daughter after her mother vanishes.

All heartwarming stories. Most shared hundreds, even thousands, of times. All completely made up.

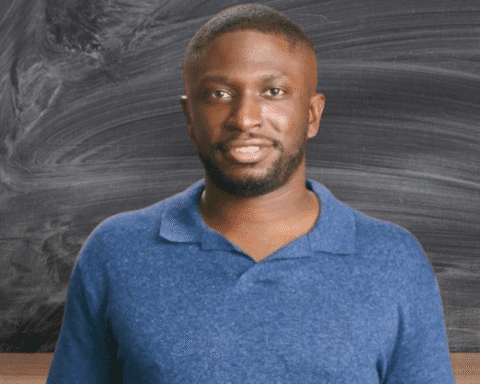

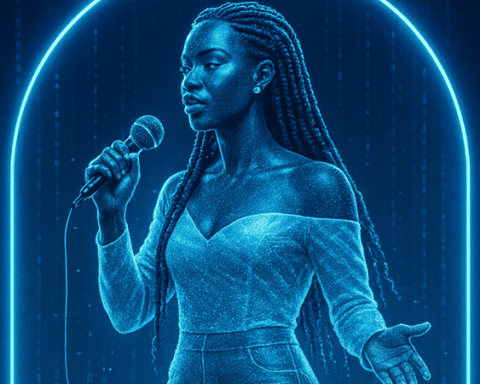

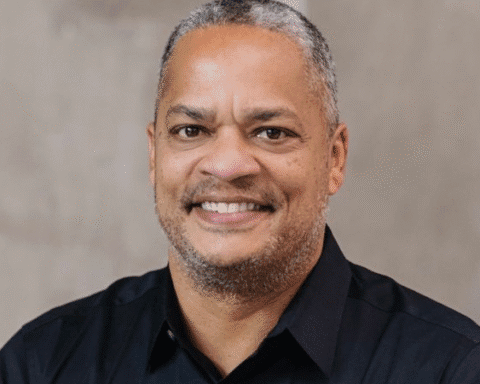

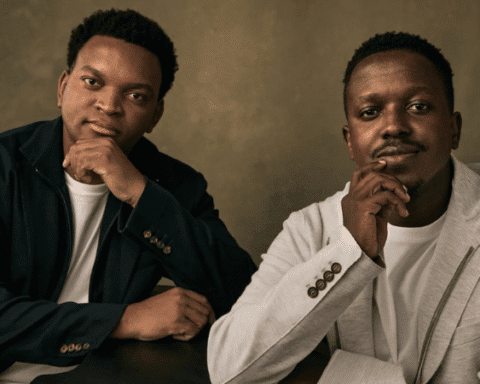

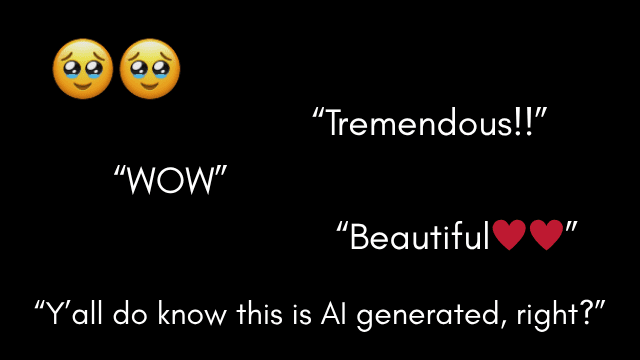

These viral posts aren’t just emotionally charged; they’re manufactured. The photos are AI-generated. The captions are fictional. The characters don’t exist.

Yet people across Facebook, Instagram, LinkedIn, and TikTok are commenting, liking, and sharing them as if they’re real.

The formula: fake families, real feelings

These stories follow a pattern. A dramatic setup. A moment of love. A redemptive ending.

Take “Rachel and Baby Elijah.” A 24-year-old white woman volunteers at a hospital nursery and decides to adopt a Black infant left in a box outside the ER. She raises him alone, works three jobs to pay for his piano lessons, and watches him grow into a Harvard-bound teenager.

At graduation, he gives a moving speech about how she saved his life, and ends with the line: “You’re still holding my hand, Mom. And I’ll never let go.”

Good stuff, right? It never happened.

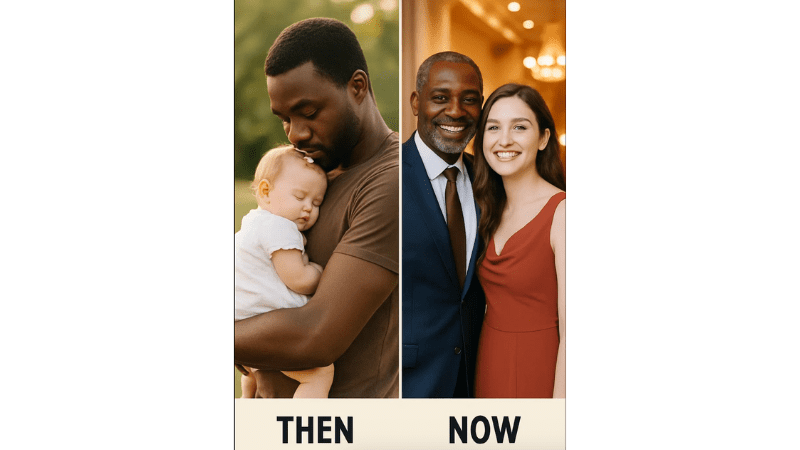

Another post features a young Black man named Jared, left to raise a white baby girl after her mother walks out just three weeks after birth. He doesn’t know how to braid hair or choose dresses, but he learns. He works two jobs, raises her alone, and whispers the same words every night: “You weren’t a mistake. You’re my miracle.” Years later, at her graduation, she tells him, “This is your degree too, Dad.”

It reads like a Hallmark movie. But again, none of it is real.

Each post is paired with a split “Then and Now” image — young parent and infant on one side, grown child and smiling adult on the other. They’re often interracial. Always perfectly lit. And entirely synthetic.

The AI slop economy, now dressed in emotion

In the tech world, this type of content is often referred to as AI slop — cheap, algorithm-chasing content created with minimal effort and maximum emotional payoff. It started with robotic narrations and slideshow clips. Now it’s evolving into something far more deceptive.

These posts aren’t just sloppy. They’re calculated. They exploit emotional triggers — abandonment, resilience, parenthood, racial reconciliation, and triumph — to grab your attention and keep you scrolling.

And they work.

Many of these accounts have built huge followings, sometimes in the millions, by delivering synthetic inspiration to audiences looking for relief in a chaotic world. These AI families are optimized for algorithms. Real families aren’t.

So what’s the harm?

Some might argue these stories are harmless. But they raise serious ethical concerns.

They exploit real dynamics like race, adoption, grief, and illness to build engagement and monetize attention. They often center trauma to drive clicks, and they crowd out real stories of resilience from people who don’t have the resources to generate and boost content with artificial polish.

These stories don’t just go viral because they’re convincing; they go viral because they tap into something deeper. In a society consumed by fear and outrage, people yearn for positive news.

These posts offer relief, even if it’s artificial. They remind us of the kind of love, connection, and resilience we want to believe still exists.

Real stories still matter

This isn’t about cynicism. It’s about discernment in an era where virality often outpaces truth and ethics. Every time we engage online, we carry that responsibility.

There are real adoptive parents. Real single dads. Real people who defy expectations and show up with love, sacrifice, and resilience. Their stories deserve to be seen, shared, and celebrated.

I care about this because I tell real stories. Not to go viral, but to inform and inspire.

That’s the work I believe in.

How to tell if a story is real

Before liking or sharing, here are a few things you can do:

Check the profile. Is the page full of overly emotional stories with no sources? That’s a red flag.

Look at the photo. Are the faces a little too smooth? Hands distorted? Backgrounds strangely soft? These AI tells can be harmless, until they’re used to sell fake stories. That’s the real issue.

Google the story. Can you find a real name? A credible source? A news report? If not, it may be fiction.

Look for a disclaimer. Some pages include one, but it’s often buried in the bio or “About” section that no one checks.

Don’t let the algorithm play with your emotions.